Global Local Modeling

Fitting a single forecasting model with shared weights on a dataset composed of many time series yields what is known as a global model. Global models are especially useful when a single time series may not reflect all of the underlying dynamics. In addition, global models can generalize better and need less storage than one separate model per time series.

However, when many time series share only some behaviors, a global-local model may be more appropriate. In this type of model, a single model with shared weights captures the behaviors common to all time series, while selected components are modeled separately for each time series.
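The following minimal least-squares sketch makes the distinction concrete. It uses plain NumPy, not NeuralProphet, on hypothetical toy data: two hourly series share a daily sine pattern but have different linear trends. A fully global design uses one intercept, one slope and one pair of seasonal weights for both series. A global-local design gives each series its own intercept and slope while keeping the seasonal weights shared.

```python
import numpy as np

# Hypothetical toy data: two hourly series (two weeks each) that share a daily
# seasonal pattern but follow different linear trends.
t = np.arange(24 * 14, dtype=float)
season = 10 * np.sin(2 * np.pi * t / 24)
y_a = 100 + 0.5 * t + season
y_b = 50 - 0.3 * t + season

# Stack both series into one long dataset, as a global model would see them.
y = np.concatenate([y_a, y_b])
tt = np.concatenate([t, t])
is_a = np.concatenate([np.ones_like(t), np.zeros_like(t)])  # indicator of series A
is_b = 1.0 - is_a                                             # indicator of series B
sin_f = np.sin(2 * np.pi * tt / 24)
cos_f = np.cos(2 * np.pi * tt / 24)

# Global: one intercept, one slope and one set of seasonal weights for both series.
X_global = np.column_stack([np.ones_like(tt), tt, sin_f, cos_f])
# Global-local: per-series intercept and slope, seasonal weights still shared.
X_global_local = np.column_stack([is_a, is_a * tt, is_b, is_b * tt, sin_f, cos_f])


def rmse_of_fit(X, target):
    """Fit ordinary least squares and return (RMSE of the fit, coefficients)."""
    coef, *_ = np.linalg.lstsq(X, target, rcond=None)
    return float(np.sqrt(np.mean((X @ coef - target) ** 2))), coef


rmse_global, _ = rmse_of_fit(X_global, y)
rmse_gl, coef_gl = rmse_of_fit(X_global_local, y)
print(f"global RMSE: {rmse_global:.2f}, global-local RMSE: {rmse_gl:.2e}")
```

The two trends differ, so the global fit leaves a large residual. On this noise-free data, the global-local fit recovers the per-series trends (100 + 0.5t and 50 - 0.3t) and the shared seasonal amplitude of 10.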

In this notebook, we demonstrate global-local modeling by fitting the trend and seasonality components separately for each time series in the ERCOT regional hourly load dataset.

First, we install (on Colab only) and import the required packages, and then load the data:

[1]:

if "google.colab" in str(get_ipython()):
    # uninstall preinstalled packages from Colab to avoid conflicts
    !pip uninstall -y torch notebook notebook_shim tensorflow tensorflow-datasets prophet torchaudio torchdata torchtext torchvision
    !pip install git+https://github.com/ourownstory/neural_prophet.git # may take a while
    #!pip install neuralprophet # much faster, but may not have the latest upgrades/bugfixes

import pandas as pd
from neuralprophet import NeuralProphet, set_log_level
from neuralprophet import set_random_seed
import numpy as np

set_random_seed(10)
set_log_level("ERROR", "INFO")

[2]:

data_location = "https://raw.githubusercontent.com/ourownstory/neuralprophet-data/main/datasets/"

df_ercot = pd.read_csv(data_location + "multivariate/load_ercot_regions.csv")

df_ercot.head()

[2]:

|   | ds | COAST | EAST | FAR_WEST | NORTH | NORTH_C | SOUTHERN | SOUTH_C | WEST |
|---|---|---|---|---|---|---|---|---|---|
| 0 | 2004-01-01 01:00:00 | 7225.09 | 877.79 | 1044.89 | 745.79 | 7124.21 | 1660.45 | 3639.12 | 654.61 |
| 1 | 2004-01-01 02:00:00 | 6994.25 | 850.75 | 1032.04 | 721.34 | 6854.58 | 1603.52 | 3495.16 | 639.88 |
| 2 | 2004-01-01 03:00:00 | 6717.42 | 831.63 | 1021.10 | 699.70 | 6639.48 | 1527.99 | 3322.70 | 623.42 |
| 3 | 2004-01-01 04:00:00 | 6554.27 | 823.56 | 1015.41 | 691.84 | 6492.39 | 1473.89 | 3201.72 | 613.49 |
| 4 | 2004-01-01 05:00:00 | 6511.19 | 823.38 | 1009.74 | 686.76 | 6452.26 | 1462.76 | 3163.74 | 613.32 |

We extract the region names (all columns except ‘ds’), which we use below to build the combined dataset.

[3]:

regions = list(df_ercot)[1:]

Global modeling is enabled when the input DataFrame has an additional column ‘ID’ that identifies the individual time series. This column comes in addition to the usual column ‘ds’, which holds the timestamps, and column ‘y’, which holds the observed values. In our example, we keep roughly three years of hourly data per region, starting in January 2004.

[4]:

df_global = pd.DataFrame()
for col in regions:
    aux = df_ercot[["ds", col]].copy(deep=True)  # select the timestamp and the column of this region
    aux = aux.iloc[:26301, :].copy(deep=True)  # keep the first 26,301 rows (about three years of hourly data from January 2004)
    aux = aux.rename(columns={col: "y"})  # rename the value column to 'y', as NeuralProphet expects
    aux["ID"] = col  # label every row with its region name
    df_global = pd.concat((df_global, aux))

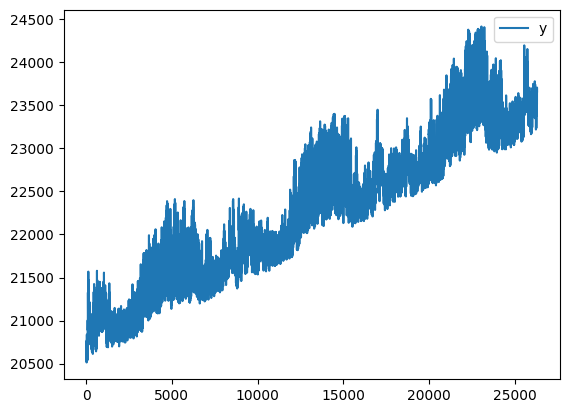

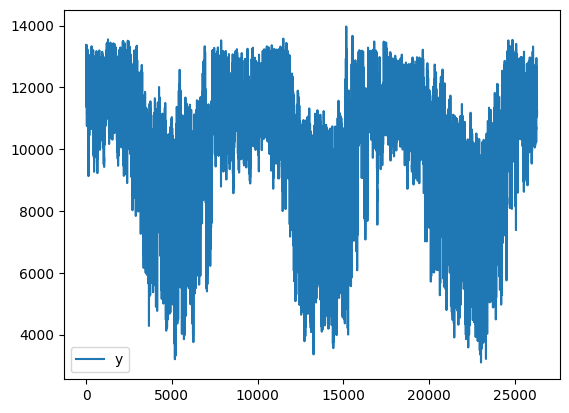
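The same wide-to-long reshaping can be done in one call with `pandas.DataFrame.melt`. The minimal sketch below runs on a hypothetical three-row excerpt of the data shown earlier. It is an alternative to the loop above, not the notebook's own method.

```python
import pandas as pd

# Hypothetical wide-format excerpt: one timestamp column plus one column per region.
wide = pd.DataFrame({
    "ds": ["2004-01-01 01:00:00", "2004-01-01 02:00:00", "2004-01-01 03:00:00"],
    "COAST": [7225.09, 6994.25, 6717.42],
    "EAST": [877.79, 850.75, 831.63],
})

# melt stacks the region columns into rows: the column name becomes 'ID'
# and the value becomes 'y'; then reorder the columns to ds, y, ID.
long = wide.melt(id_vars="ds", var_name="ID", value_name="y")[["ds", "y", "ID"]]
print(long)
```

One design difference matters here. `melt` produces a continuous index (0, 1, 2, ...) over all rows, whereas the loop above keeps each region's original row index, which restarts at 0 for every region. The NORTH modification in the next step uses `df_global.index` as a time counter, so it would need adapting (for example, with a per-ID `groupby(...).cumcount()`) if the data were built with `melt`.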

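To illustrate where local components help, we artificially modify two regions. NORTH receives an additional upward linear trend, and COAST has its seasonal pattern inverted by mirroring the series around its mean.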

[5]:

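# Mirror COAST around its own mean: this inverts its seasonal pattern while keeping its level
coast_mean = df_global.loc[df_global["ID"] == "COAST", "y"].mean()
df_global["y"] = np.where(df_global["ID"] == "COAST", 2 * coast_mean - df_global["y"], df_global["y"])
# Add a linear trend of 0.1 per hourly step to NORTH (the index restarts at 0 for each region)
df_global["y"] = np.where(df_global["ID"] == "NORTH", df_global["y"] + 0.1 * df_global.index, df_global["y"])

df_global.loc[df_global["ID"] == "NORTH"].plot()
df_global.loc[df_global["ID"] == "COAST"].plot()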
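The effect of the two modifications can be checked on a tiny frame. This minimal sketch uses made-up values. It assumes the intent is to mirror COAST around its own mean and to add a ramp of 0.1 per step to NORTH, leaving every other region unchanged. As after the concat loop above, the toy index restarts at 0 for each ID.

```python
import numpy as np
import pandas as pd

# Hypothetical toy frame in the same long format; the index restarts at 0 per ID.
toy = pd.DataFrame(
    {
        "y": [10.0, 12.0, 14.0, 5.0, 5.0, 5.0, 1.0, 2.0, 3.0],
        "ID": ["COAST"] * 3 + ["NORTH"] * 3 + ["EAST"] * 3,
    },
    index=[0, 1, 2] * 3,
)

coast = toy["ID"] == "COAST"
coast_mean = toy.loc[coast, "y"].mean()  # 12.0 for this toy data

# Mirror COAST around its mean: peaks become troughs, the mean level is unchanged.
toy["y"] = np.where(coast, 2 * coast_mean - toy["y"], toy["y"])
# Add a linear ramp (0.1 per time step) to NORTH only.
toy["y"] = np.where(toy["ID"] == "NORTH", toy["y"] + 0.1 * toy.index, toy["y"])
print(toy)
```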

[5]:

<Axes: >

Global Modeling

Remark: A model with only trend and seasonality components may perform poorly on this data. The following example only serves to demonstrate the new functionality for local modeling of multiple time series.

[6]:

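m = NeuralProphet(
    trend_global_local="global",  # one trend shared by all time series
    season_global_local="global",  # one set of seasonality parameters shared by all time series
    changepoints_range=0.8,  # only place trend changepoints within the first 80% of the training data
    epochs=20,
    trend_reg=5,  # regularize the trend changepoints (larger values give a smoother trend)
)
m.set_plotting_backend("plotly-static")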
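The sketch below illustrates what `changepoints_range=0.8` means. It assumes 10 changepoints spread evenly over the first 80% of one region's training rows. Both the even spacing and the count of 10 are assumptions for illustration, not NeuralProphet's exact placement code, and `changepoint_positions` is a hypothetical helper. The 17,622 training rows per region come from the local split below.

```python
import numpy as np


def changepoint_positions(n_obs, n_changepoints=10, changepoints_range=0.8):
    """Hypothetical helper: evenly spaced changepoint row indices restricted to
    the first `changepoints_range` fraction of the training history."""
    last = int(n_obs * changepoints_range)
    # linspace includes index 0; drop it so no changepoint sits on the first row
    return np.linspace(0, last, n_changepoints + 1).round().astype(int)[1:]


positions = changepoint_positions(17622)
print(positions)  # no changepoint falls in the last 20% of the training rows
```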

When a pd.DataFrame with an ‘ID’ column is passed to the split_df function, the train and validation data are returned in the same format. For global models, the data is by default split according to a fraction of the time range spanned by all time series. This is the default when there is more than one ‘ID’ and local_split=False. To split each time series individually instead, set local_split=True. In this example, we split each series locally into train and test sets with a 33% test proportion (about 2 years of training data and 1 year of test data per region).
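The following pandas sketch shows what a local split does. It assumes each series is cut chronologically, with its last `int(len * valid_p)` rows held out for testing. `local_split` is a hypothetical helper, not NeuralProphet's `split_df` implementation. For 26,301 rows per region it yields 17,622 training and 8,679 test rows per region, which is consistent with the shapes printed by the next cell (8 regions times 17,622 = 140,976 and 8 times 8,679 = 69,432).

```python
import numpy as np
import pandas as pd


def local_split(df, valid_p=0.33):
    """Hypothetical helper: chronological train/test split applied to each ID separately."""
    train_parts, test_parts = [], []
    for _, group in df.groupby("ID", sort=False):
        group = group.sort_values("ds")
        n_test = int(len(group) * valid_p)  # rows held out at the end of this series
        train_parts.append(group.iloc[: len(group) - n_test])
        test_parts.append(group.iloc[len(group) - n_test :])
    return pd.concat(train_parts), pd.concat(test_parts)


# Hypothetical toy data: two IDs with 26,301 rows each (integer time steps as 'ds').
n = 26301
toy = pd.concat(
    [pd.DataFrame({"ds": np.arange(n), "y": 0.0, "ID": region}) for region in ["COAST", "EAST"]]
)
train, test = local_split(toy)
print(train.groupby("ID").size().to_dict(), test.groupby("ID").size().to_dict())
```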

[7]:

df_train, df_test = m.split_df(df_global, valid_p=0.33, local_split=True)

print(df_train.shape, df_test.shape)

(140976, 3) (69432, 3)

After creating the NeuralProphet object, we train the model by calling the fit function.

[ ]:

metrics = m.fit(df_train, freq="H")

Make sure the data you pass to post-fitting methods (e.g., predict and test) uses the same ‘ID’ values as the corresponding training time series. This ensures that the appropriate normalization parameters are applied to each series.
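The following sketch shows why the ID matters after fitting. It assumes simple per-ID min-max scaling and uses the hypothetical helpers `fit_normalization` and `normalize`. NeuralProphet's own normalization may differ in detail. The point is only that each series' scaling parameters are looked up by its ID, so a test series must carry the same ID it had in training.

```python
import pandas as pd


def fit_normalization(df_train):
    """Hypothetical helper: store a (shift, scale) pair per ID using min-max scaling."""
    stats = df_train.groupby("ID")["y"].agg(["min", "max"])
    return {i: (row["min"], row["max"] - row["min"]) for i, row in stats.iterrows()}


def normalize(df, params):
    """Scale each row with the parameters fitted for its ID; unknown IDs raise KeyError."""
    shift = df["ID"].map(lambda i: params[i][0])
    scale = df["ID"].map(lambda i: params[i][1])
    return (df["y"] - shift) / scale


train = pd.DataFrame({"y": [0.0, 10.0, 100.0, 300.0], "ID": ["A", "A", "B", "B"]})
params = fit_normalization(train)
test = pd.DataFrame({"y": [5.0, 200.0], "ID": ["A", "B"]})
print(normalize(test, params).tolist())  # [0.5, 0.5]
```

The same raw value would be scaled very differently under the other series' parameters, which is why mislabeled IDs silently distort predictions or fail outright.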

[ ]:

future = m.make_future_dataframe(df_test, n_historic_predictions=True)

forecast = m.predict(future)

We now plot the forecast and the model parameters for:

- NORTH: with a modified trend
- COAST: with a modified seasonality
- EAST: unchanged from the original data

North

[10]:

m.plot(forecast[forecast["ID"] == "NORTH"])

[11]:

m.plot_parameters(df_name="NORTH")

Coast

[12]:

m.plot(forecast[forecast["ID"] == "COAST"])

[13]:

m.plot_parameters(df_name="COAST")

East

[14]:

m.plot(forecast[forecast["ID"] == "EAST"])

[15]:

m.plot_parameters(df_name="EAST")

Metrics

[16]:

test_metrics_global = m.test(df_test)

test_metrics_global

| Runningstage.testing metric | DataLoader 0 |
|---|---|
| Loss_test | 0.12341304123401642 |
| MAE_val | 0.23543809354305267 |
| RMSE_val | 0.26708388328552246 |
| RegLoss_test | 0.0 |

[16]:

|   | MAE_val | RMSE_val | Loss_test | RegLoss_test |
|---|---|---|---|---|
| 0 | 0.235438 | 0.267084 | 0.123413 | 0.0 |

Local Modeling of Trend and Seasonality

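We now repeat the process above, this time modeling trend and seasonality locally, i.e., with separate trend and seasonality parameters for each ‘ID’, and reusing the same train and test split.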

[18]:

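m = NeuralProphet(
    trend_global_local="local",  # a separate trend for each time series (ID)
    season_global_local="local",  # separate seasonality parameters for each time series (ID)
    changepoints_range=0.8,  # only place trend changepoints within the first 80% of the training data
    epochs=20,
    trend_reg=5,  # regularize the trend changepoints (larger values give a smoother trend)
)
m.set_plotting_backend("plotly-static")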

[ ]:

metrics = m.fit(df_train, freq="H")

[ ]:

future = m.make_future_dataframe(df_test, n_historic_predictions=True)

forecast = m.predict(future)

North

[21]:

m.plot(forecast[forecast["ID"] == "NORTH"])

[22]:

m.plot_parameters(df_name="NORTH")

Coast

[23]:

m.plot(forecast[forecast["ID"] == "COAST"])

[24]:

m.plot_parameters(df_name="COAST")

East

[25]:

m.plot(forecast[forecast["ID"] == "EAST"])

[26]:

m.plot_parameters(df_name="EAST")

Metrics

[27]:

test_metrics_local = m.test(df_test)

test_metrics_local

| Runningstage.testing metric | DataLoader 0 |
|---|---|
| Loss_test | 0.08480319380760193 |
| MAE_val | 0.18777026236057281 |
| RMSE_val | 0.220332533121109 |
| RegLoss_test | 0.0 |

[27]:

|   | MAE_val | RMSE_val | Loss_test | RegLoss_test |
|---|---|---|---|---|
| 0 | 0.18777 | 0.220333 | 0.084803 | 0.0 |

Conclusion

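Comparing the two models, the global-local model achieves a lower test error than the purely global model (MAE 0.188 vs. 0.235 and RMSE 0.220 vs. 0.267). This is consistent with NORTH and COAST having trend and seasonality patterns that differ from those of the other regions.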
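The size of the improvement can be quantified directly from the two test tables. This short computation uses only the MAE_val and RMSE_val values reported above.

```python
# Test metrics copied from the two m.test(df_test) outputs above.
global_metrics = {"MAE_val": 0.235438, "RMSE_val": 0.267084}
global_local_metrics = {"MAE_val": 0.18777, "RMSE_val": 0.220333}

# Relative error reduction when trend and seasonality are modeled locally.
reductions = {
    name: 1 - global_local_metrics[name] / global_metrics[name] for name in global_metrics
}
for name, reduction in reductions.items():
    print(f"{name}: {reduction:.1%} lower with the global-local model")
```

This gives roughly a 20% lower MAE and a 17.5% lower RMSE for the global-local model on this test split.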